From the transcript of Sean Carroll’s Mindscape program, 129 | Solo: Democracy in America [h/t Bob Moore]

0:15:13.8 SC: . . . . the idea that the election was stolen was made by a whole bunch of partisan actors, but it was also, I think, importantly, taken up as something worth considering, even if not necessarily true, by various contrarian, centrist pundits, right?

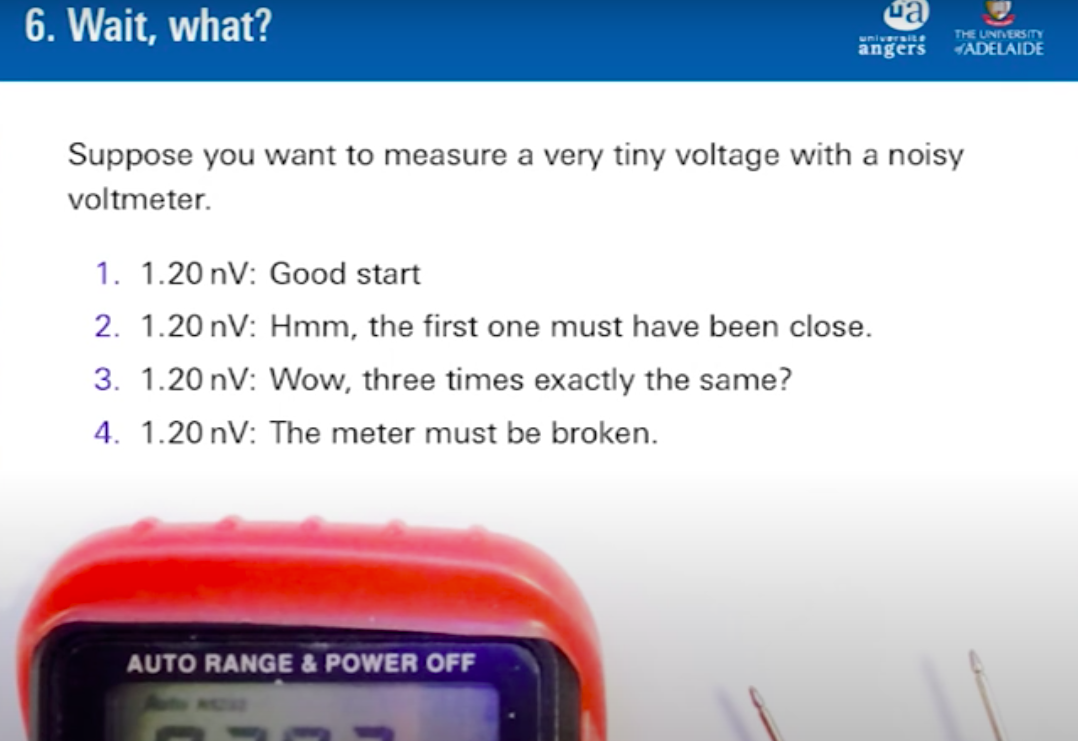

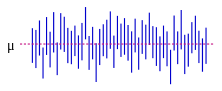

0:16:32.1 SC: . . . . So the answer I would have put forward is, “No. [chuckle] It was never worth taking that kind of claim seriously.” . . . . We like to talk here about being Bayesian, and in fact, it’s almost a cliche in certain corners of the internet, talking about being good Bayesians. What is meant by that is, for a set of propositions like “the election was stolen” and “the election was not stolen,” okay, two mutually exclusive propositions, you assign prior probabilities or prior credences to these propositions being true. So you might say,

- “Well, elections are not usually stolen, so the credence I would put on that claim, my prior, is very, very small.

- And the credence I would put on it not being stolen is very large.”

So we collect the data that will help us assess which proposition is more likely. If the data is not what we would expect if X were true, then we lower our credence that X really did happen. If the data is exactly what we would expect if X were true, and not what we would expect if X were false, then our credence that X is true goes up.
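For reference, this kind of updating is usually written as Bayes' rule. The symbols are labels chosen for this note rather than anything in the transcript: $X$ is the proposition, $\neg X$ its negation, and $D$ the data collected.

$$
P(X \mid D) = \frac{P(D \mid X)\,P(X)}{P(D \mid X)\,P(X) + P(D \mid \neg X)\,P(\neg X)}
$$

Since $P(X) + P(\neg X) = 1$, the special case $P(D \mid X) = P(D \mid \neg X)$ makes the likelihoods cancel, leaving $P(X \mid D) = P(X)$: data that is equally expected either way does not move the credence.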

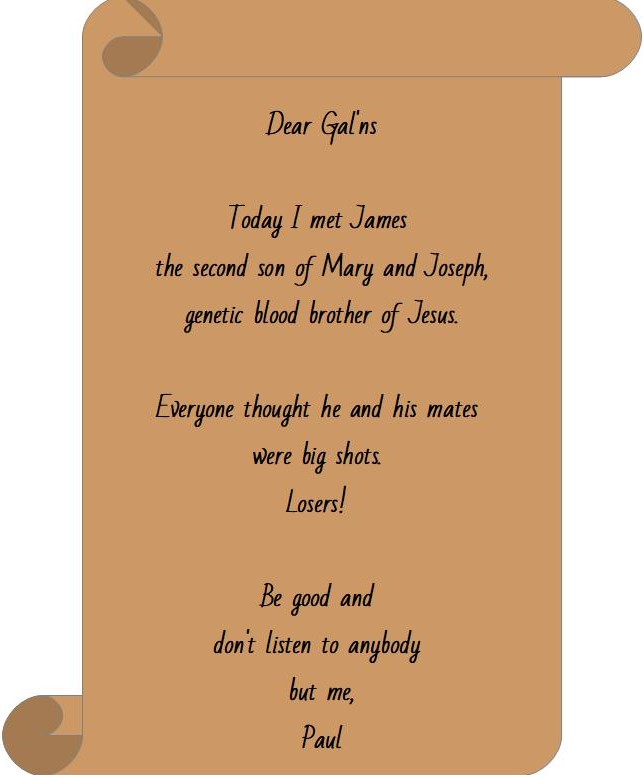

0:18:51.0 SC: . . . . So in a case like this where a bunch of people are saying, “Oh, there was election fraud, irregularities, the counting was off by this way or that way. It all seems suspicious.” You should ask yourself, “Did I expect that to happen?” The point is that if you expected exactly those claims to be made, even if the underlying proposition that the election was stolen is completely false, then seeing those claims being made provides zero evidence for you to change your credences whatsoever. Okay? So to make that abstract statement a little more down to earth, in the case of the elections being stolen, how likely was it that if Donald Trump did not win the election, that he and his allies would claim the election was stolen independent of whether it was, okay? What was the probability that he was going to say that there were irregularities and it was stolen?

0:20:19.6 SC: Well, a 100%, roughly speaking, 99.999, if you wanna be a little bit more metaphysically careful, but they announced ahead of time that they were going to make those claims, right? He had been saying for months that the very idea of voting by mail is irregular and was going to lead to fraud, and they worked very hard to make the process difficult, both to cast votes and then to count them. Different states had different ways of counting, and certain states were prohibited from counting mail-in ballots ahead of time. The Democrats were much more likely to vote by mail than the Republicans were. They slowed down the postal service, trying to make it take longer for mail-in votes to get there. There’s a whole bunch of things going on. In prior elections, in the primaries, Trump had accused his opponents of rigging the election and stealing votes without any evidence.

0:21:15.3 SC: So your likelihood function, that you would see these claims rise up even if the underlying proposition was not true, is basically 100%. And therefore, as a good Bayesian, the fact that people were raising questions about the integrity of the election means nothing. It’s just what you expect to happen.
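A minimal numerical sketch of this point, under stated assumptions: the prior of 0.01 is an arbitrary illustrative number, not a figure from the transcript, and the function name `posterior` is made up for this example. The two likelihoods follow Carroll's rough "100%" and "99.999" figures for how probable the fraud claims were whether or not the election was stolen.

```python
def posterior(prior: float, p_data_if_true: float, p_data_if_false: float) -> float:
    """Bayes' rule for a yes/no proposition X after observing data D."""
    # Total probability of seeing the data, summed over X true and X false.
    evidence = prior * p_data_if_true + (1.0 - prior) * p_data_if_false
    return prior * p_data_if_true / evidence


# Hypothetical prior credence that the election was stolen (illustrative only).
prior_stolen = 0.01

# "Data": prominent people claim the election was stolen.
# Assumed to be expected ~100% of the time if it was stolen,
# and ~99.999% of the time even if it was not.
updated = posterior(prior_stolen, p_data_if_true=1.0, p_data_if_false=0.99999)

print(f"prior = {prior_stolen}, posterior = {updated:.7f}")
```

Because the claims are almost equally likely under both propositions, the posterior is essentially the prior. Only data that is much more probable under one proposition than the other would shift the number.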

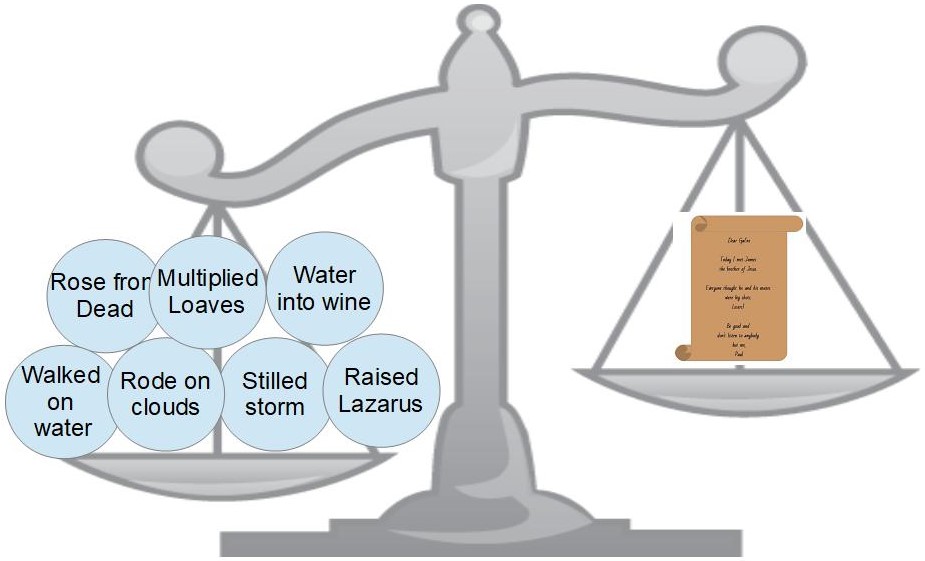

The data we need to see in order to take the claims of fraud seriously:

If you really want to spend any effort at all taking a claim like this seriously, you have to go beyond that simple thing, “Oh someone claimed that something’s going on, therefore it’s my job to evaluate it and wait for more evidence to come in.”

You should ask further questions, “What else should I expect to be true if this claim was correct?” For example, if the Democrats had somehow been able to get a lot of false ballots, rig elections, you would expect to see certain patterns, like Democrats winning a lot of elections they had been predicted to lose. Different cities, or locations more broadly, where the frauds were purported to happen would be ones where anomalously large percentages of people were voting for Biden rather than Trump.

0:22:28.3 SC: In both cases, both the idea that you would predict Democrats winning elections they had been predicted to lose, and that places where fraud was alleged to have happened would be anomalously pro-Biden, it was the opposite. And you could instantly see that it was the opposite, right after election day.

- The Democrats lost elections for the House of Representatives and the Senate that they were favored to win.

So they were very bad at packing the ballots, if that’s really what they were trying to do.

- In cities like Philadelphia where it was alleged that a great voter fraud was taking place, Trump did better in 2020 than he did in 2016.

So right away, without working very hard, you know this is egregious bullshit. There is no duty to think, to take seriously, to spend your time worrying about the likely truth of this outrageous claim, the falsity of which is completely compatible with all the evidence we have.

0:23:32.2 SC: So just to make it dramatic, let me spend a little bit of time here… Let me give you an aside, which is my favorite example of what I mean by this kind of attitude because it is very tricky. You should never, and I’m very happy to admit, you should never assign zero credence to essentially any crazy claim. That would be bad practice as a good Bayesian because if you assigned zero credence to any claim, then no amount of new evidence would ever change your mind. Okay? You’re taking the prior probability, multiplying it with the likelihood, but if the prior probability is zero, then it doesn’t matter what the likelihood is, you’re always gonna get zero at the end. And you should be open to the idea that evidence could come in that this outrageous claim is true, that the election was stolen, it’s certainly plausible that such evidence would come in.
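The zero-prior trap can be checked with a short sketch. Everything numerical here is an assumption for illustration: the function names are made up, and the "strong evidence" likelihoods (0.99 if the claim is true, 0.01 if false) and the tiny prior of one in a million do not come from the transcript.

```python
def update(prior: float, p_data_if_true: float, p_data_if_false: float) -> float:
    """One Bayesian update: prior times likelihood, then normalize."""
    numerator = prior * p_data_if_true
    evidence = numerator + (1.0 - prior) * p_data_if_false
    return numerator / evidence


def update_repeatedly(prior: float, observations: list[tuple[float, float]]) -> float:
    """Apply `update` once per (p_data_if_true, p_data_if_false) pair."""
    credence = prior
    for p_true, p_false in observations:
        credence = update(credence, p_true, p_false)
    return credence


# Ten hypothetical pieces of strong evidence in favor of the claim.
strong_evidence = [(0.99, 0.01)] * 10

zero_result = update_repeatedly(0.0, strong_evidence)   # prior of exactly zero
tiny_result = update_repeatedly(1e-6, strong_evidence)  # tiny but non-zero prior

print(f"zero prior -> {zero_result}")
print(f"tiny prior -> {tiny_result:.6f}")
```

A prior of exactly zero stays at zero no matter how strong the evidence, while a tiny non-zero prior can still be driven close to one. That is the practical difference between "impossible" and "so unlikely it is not worth a minute of worry".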

0:24:21.9 SC: Now it didn’t, right, when actually they did have their day in court, they were laughed … out of court because they had zero evidence, even all the way up to January 6th when people in Congress were raising a stink about the election not being fair, they still had no evidence. The only claim they could make was that people were upset and people had suspicions, right? Even months later, so there was never any evidence that it was worth taking seriously. But nevertheless, even without that, I do think you should give some credence and therefore you have to do the hard work of saying, “Well, I’m giving it some non-zero credence, but so little that it’s not really worth spending even a minute worrying about it.” That’s a very crucial distinction to draw, and it’s very hard to do.