As my thesis partner and I gathered up the evidence we had collected, it began to dawn on us — as well as on our thesis advisers — that we didn’t have enough for ordinary, “normal” statistics. Our chief adviser, an Air Force colonel, and his captain assistant were on the faculty at the Air Force Institute of Technology (AFIT), where my partner and I were both seeking a master’s degree in logistics management.

We had traveled to the Warner Robins Air Logistics Center in Georgia to talk with a group of supply-chain managers and to administer a survey. We were trying to find out if they adapted their behavior based on what the Air Force expected of them. Our problem, we later came to understand, was a paucity of data. Not a problem, said our advisers. We could instead use non-parametric statistics; we just had to take care in how we framed our conclusions and to state clearly our level of confidence in the results.

Shopping for Stats

In the end, I think our thesis held up pretty well. Most of the conclusions we reached rang true and matched both common sense and the emerging consensus in logistics management based on Goldratt’s Theory of Constraints. But the work we did to prove our claims mathematically, with page after page of computer output, sometimes felt like voodoo. To be sure, we were careful not to put too much faith in them, not to “put too much weight on the saw,” but in some ways it seemed as though we were shopping for equations that proved our point.

I bring up this story from the previous century only to let you know that I am in no way a mathematician or a statistician. However, I still use statistics in my work. Oddly enough, when I left AFIT I simultaneously left the military (because of the “draw-down” of the early ’90s) and never worked in the logistics field again. I spent the next 24 years working in information technology. Still, my statistical background from AFIT has come in handy in things like data correlation, troubleshooting, reporting, data mining, etc.

We spent little, if any, time at AFIT learning about Bayes’ Theorem (BT). I think looking back on it, we might have done better in our thesis, chucking our esoteric non-parametric voodoo and replacing it with Bayesian statistics. I first had exposure to BT back around the turn of the century when I was spending a great deal of time both managing a mail server and maintaining an email interface program written in the most hideous dialect of C the world has ever produced.

As you probably know, one of the first email spam filters that actually worked relied on Bayesian inference. The biggest problem at the time was trying to find a balance between letting the bad stuff in versus keeping the good stuff out. A false positive can cause almost as much damage as an unblocked chunk of malware. “Didn’t you get my email?” asks the customer who took his business elsewhere.

Back then I didn’t give a damn about the philosophical difference between the frequentist statistics they taught us at AFIT and this up-and-coming rival. Nor did I care about their little feud. All that mattered at the time was that it worked. We still had false positives, but they were fewer. And it could learn, which was pretty impressive at the time. (We used SpamAssassin, by the way.)

The feud didn’t make a lot of sense to me at the time. It seemed more like a turf war than anything else. I mean, aren’t we all trying to discover the same thing? Isn’t probability the same, no matter which tools you use?

Well, actually no. It isn’t. The world is the same. The data are the same. But their perspectives and their outputs are fundamentally different.

What do we mean by “probability”?

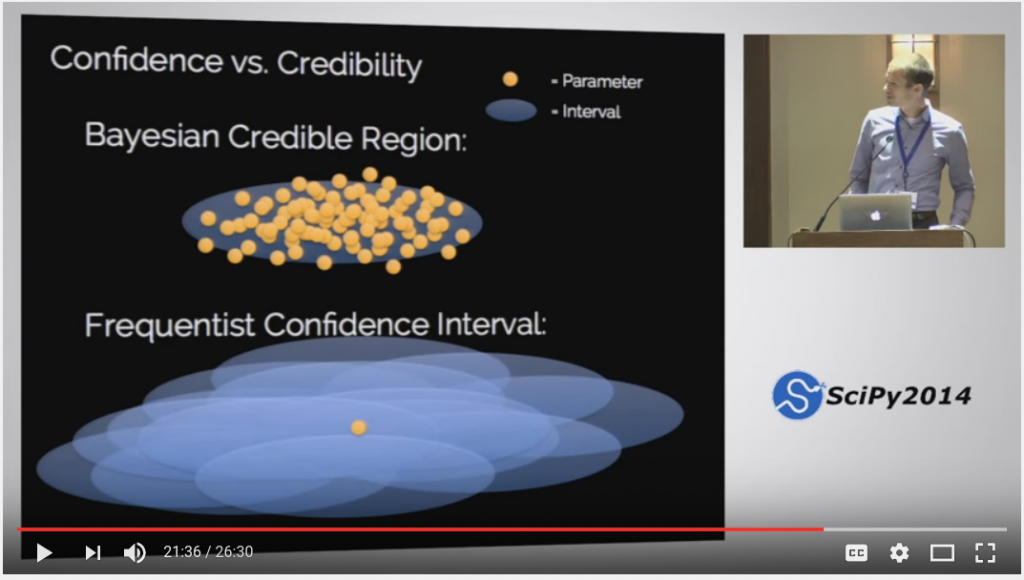

I’m a visual sort of person, so I often need to see something explained graphically before I get past that phase where I’m only repeating what I’ve memorized and reach that point where I can feel it in my gut. For example, in the video below, Jake VanderPlas, a scientist (astronomer) at the University of Washington, imagines the following conversation.

Statistician: “95% of such confidence intervals in repeated experiments will contain the true value.”

Scientist: “So there’s a 95% chance that the value is in this interval?”

Statistician: “No: you see, parameters by definition can’t vary, so referring to chance in this context is meaningless. The 95% refers to the interval itself.”

Scientist: “Oh, so there’s a 95% chance that the value is in this interval?”

And so they go round and round. You may recall writing papers in which you had to be extremely precise about saying what your stats actually proved. But other than professional statisticians, who really has a clear understanding about “confidence intervals in repeated experiments“? The reason the scientists (and the rest of us) keep getting it wrong is that the Bayesian description of probability is more natural.

The big takeaway is this: Frequentist results are not the same as Bayesian results, and if you don’t understand that, you may fundamentally misinterpret what one or the other is saying. Frequentism is not wrong; it’s just measuring a different thing. In fact, the two approaches have different definitions of probability.

As VanderPlas explains:

You’ve got to remember this: in general, when someone gives you a frequentist confidence interval, it’s not 95% likely to contain the true value. This is a Bayesian interpretation of a frequentist construct, and it happens all the time. You have to be really, really careful about this.

So now we understand that the Bayesian and the frequentist are talking about two different things. But for me it really hits home when I see it explained graphically.

The Bayesian imagines one credible region, and multiple parameters either fall inside or outside that region. The frequentist sees one parameter, a sort of Platonic ideal, with multiple confidence intervals that may or may not overlap that parameter. I invite you to watch and enjoy this fun, easy-to-watch video, in which I think VanderPlas absolutely nails it.

https://www.youtube.com/embed/KhAUfqhLakw

Tim Widowfield

Latest posts by Tim Widowfield (see all)

- What Did Marx Say Was the Cause of the American Civil War? (Part 1) - 2024-05-12 19:09:26 GMT+0000

- How Did Scholars View the Gospels During the “First Quest”? (Part 1) - 2024-01-04 00:17:10 GMT+0000

- The Memory Mavens, Part 14: Halbwachs and the Pilgrim of Bordeaux - 2023-08-17 20:39:42 GMT+0000

If you enjoyed this post, please consider donating to Vridar. Thanks!

Very nice presentation. I am totally stealing the billiard-ball example!

The illustration might be slightly hard to follow without also talking about data. Here is an illustration:

Suppose you want to know the probability that a particular elementary particle decay into another particle within 1 seconds. Let’s call that probability q.

So you go out and observe 100 particles for 1 second and observe that 84 decay. The “best guess” at q is then 84%, however obviously you can’t conclude q is exactly 84% — in other words, the actual value of q could (reasonably) be believed to lie in an interval [a,b] around the 84%. What both the Bayesian and frequentist is trying to do is to find that interval.

Here is what the frequentist does:

He says: I want to come up with a method that takes the dataset (84 of 100), and computes — by some formula– an interval [a,b]. I want that formula to do the following: If a million new groups of scientists go into a million different laboratories, and each group observes 100 particles and writes down how many decay, and each use my formula to compute their own interval [a,b], i want exactly 95% of the scientists to find intervals where q is actually within [a,b].

If that is true then the formula for computing [a,b] is called the 95% confidence interval. That’s what is illustrated in the bottom of his screen. Notice in this way of thinking q was considered fixed at all times, as it actually is (it is a constant of nature).

A Bayesian does the following:

He says: I don’t know what q, however for any interval [a,b] I can use Bayes theorem to compute:

P(“q falls within [a,b]” | 84 decays were observed out of 100)

He then goes looking for intervals [a,b] such that the above probability is 95% (how is not very important). In other words, in his opinion he is finding the probability of q (even though it is a constant of nature that does not vary) and is not interested in what a million hypothetical scientists might observe.

Where they disagree is that the frequentist might object to the Bayesian and say that there is no “probability of q” since q is a constant of nature, whereas the Bayesian says that this is because probabilities (on his interpretation of what probabilities are) reflect his subjective judgement of what q is given his present state of information. Round and round it goes and the two intervals they find are usually very similar.

The nice thing about the billiard ball example is that it’s very old — perhaps the original Bayesian thought experiment — and easy to visualize. I’m very big on visualization. For example, I used to love doing logic puzzles (e.g., “The man in the yellow house smokes Winston Filter Kings”), but I need to see the solution graphically. In the back of the puzzle magazines there’d be this wall of text that explained it all, but didn’t help me one bit.

In the next installment, I hope to explain that for a lot of cases, the solution range for frequentists and Bayesians overlaps, so it further contributes to the illusion that they’re talking about the same thing. But they aren’t. As you said, “the two intervals they find are usually very similar.” But things get rather tricky out at the edges.

I had not actually heard about it. Do you by any chance know who it goes back to? (LaPlace?). A somewhat similar famous old problem is the Bertrand paradox, which is the problem where you choose chords at random on a circle and try to compute the probability the chord is longer than the side of the inscribed equilateral triangle, however, it is both quite difficult to present the paradox (or the solution) because you got to define what a “random chord” is. The billiard example is a lot simpler and the solution is actually useful in other contexts.

On p. 8, Section II, Bayes describes the original problem. He didn’t call it a “billiard” table; later authors took liberties.

http://www.stat.ucla.edu/history/essay.pdf

Finally had time to get back to reading Vridar posts, but it was good timing to find this post at the top. Nice work, Tim, I look forward to watching the video and reading the next installment(s).

And a huge kudos to using “oddly enough” instead of incorrectly saying “ironically”. Bravo!