Gentleman Joe

Over on The New Oxonian, R. Joseph Hoffmann, leader of the Jesus Process©™® Triumvirate, has deigned to comment on my post, “Proving This! — Hoffmann on Bayes’ Theorem.” As expected, his response is both cordial and understated. Ever the gentleman, he remains humble, even though Hoffmann’s massive and mighty brain threatens to burst through his shiny, pink forehead. At first I had considered answering him right there on his site. However, since I respectfully disagree with so much of what he has written, I have decided to create a new post here on Vridar instead.

I’ll quote chunks of Hoffmann’s words here, interspersed with my responses. He’s reacting to a comment by a guy who goes by the screen name “Hajk.” Hoffmann begins:

Yes @Hajk: I was laughing politely when Vridar/Godfey[sic] made the bumble about “pure mathematics” in scare quotes; it reveals that he is a complete loser in anything related to mathematics, and when he goes on to complain that Bayes doesn’t “fear subjectivity it welcomes it” may as well toss in the towel as far as its probative force goes. Odd, someone conceding your points and then claiming victory.

“All honourable men”

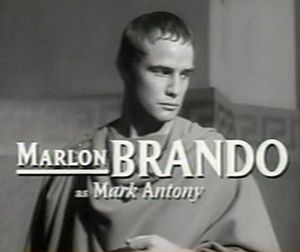

I have no doubt that he was laughing politely, since to do so derisively would be dishonorable. “And, sure, he is an honourable man.” (Julius Caesar, Act III, Scene 2)

However, condemning Neil Godfrey (with an “R”) as “a complete loser in anything related to mathematics” seems a bit harsh and hasty. First and foremost, since Neil didn’t write the post, he can hardly be held responsible for its content. The blame should fall instead to me, Tim Widowfield. Second, I was trying to make a rude joke, which I assumed Vridar readers would get. I used the scare quotes to signify my boorish disregard for credentialism. If that joke fell on deaf ears, then it merely demonstrates my lackluster sense of humor.

Of course I know the difference between pure and applied mathematics. (I admit I was a bit surprised to discover that Cambridge puts pure mathematics and mathematical statistics in the same department. In most U.S. schools, statistics and applied mathematics are grouped together.)

Branding me as “a complete loser” simply because of a failed joke is unwarranted. It would be as unfair as my saying that Hoffmann has reading comprehension problems because he didn’t notice the post’s byline, thus wrongly attributing it to Neil. It would be as petty and pedantic as my condemning Hoffmann as an illiterate clod simply because he spelled the word “them” as “tham,” and “Godfrey” as “Godfey.” Such peccadilloes are normally overlooked by honorable men. “And, sure, he is an honourable man.”

Now regarding “throwing in the towel” because BT accepts guesses in prior probability, I’m not sure how his conclusion follows from the premises. He seems to be saying that subjectivity automatically means “no probative force.” I’d like to know how he arrived at this conclusion.

Quod erat remonstrandum?

Hoffmann continues:

Even well-wishers of Carrier’s in various blog reviews have remonstrated that he should not have used the Jesus question as a test case, especially when the whole question of its application in historical studies is as yet unproved.

I had supposed that by now everyone knew why Carrier’s book on BT focuses on the question of the historical Jesus. He was, for all intents and purposes, commissioned to do so. He writes:

This all started long, long ago, four years in fact, when my wife and I were buried under student loan debt and I offered myself up to complete any hard core project my fans wanted in exchange for as many donations as I could get to fund my work. They all unanimously said “historicity of Jesus” and came up with twenty thousand dollars. Which cleared our debt and really saved us financially. It was a huge boon and I am extremely grateful for everyone who made that happen. (http://freethoughtblogs.com/carrier/archives/255)

Joe must not have known that, or surely he would have said so. In any case, I’m not sure why it should matter. NT scholars and ancient historians will judge the matter on its merits, since it is the honorable thing to do. “For [Hoffmann] is an honourable man; So are they all, all honourable men . . .”

“Honk if you love cheeses!”

More from Joe:

Maybe we should plug in “Bayes is/is not useful in historical study” and see what happens with the probability.

Now that’s really deep. It ranks just slightly below “If guns are outlawed, then only outlaws will have guns,” but slightly above “If you see this van a-rockin’, don’t come a-knockin’.” Not bad.

Hoffmann follows with more deep thoughts:

Godfrey also doesn’t know the difference between statistical/mathematical and epistemological probability, but it is clear that some people making claims of the later [sic] variety are hoping to present tham [sic] as “certainties” in the former category.

As we’ve said before, not Godfrey, but Widowfield. I think rather than “later variety,” he means “latter variety.” As far as definitions, I suppose I’d go with Hacking:

- Epistemological Probability: “the degree of belief warranted by the evidence”

- Stochastic or Statistical Probability: “the tendency, displayed by some chance devices, to produce stable relative frequencies”

Now that we’ve sorted that out, what’s the accusation again? Apparently, he thinks I don’t know the difference, and that I (and others) want to make statistical probability claims and then present “tham” as “certainties” in the epistemological category. Having gone to all that effort to decipher the sentence, I’m still not sure why Joe has reached this conclusion. Anyone? Is it because he thinks frequentist statistics is the only “real” statistics?

And the trophy goes to . . .

More from Hoffmann:

I also give you the trophy for the most rational BT comment of the day:

The parts in italics now are from Hajk, the recipient of Joe’s (virtual) trophy:

William Lane Craig thinks that the probability that God would raise Jesus from the dead is “inscrutable”. Should we plug [in] 50%?

I don’t think so; do you? Does anyone? First of all, I doubt Craig would get involved in this sort of discussion, since he probably knows where it would lead. Note that if we were trying to determine the probability that God raised Jesus from the dead, in 30 CE, that’s the conclusion. The numbers we’d plug in for prior probability would relate to the probability of an empty tomb signifying a reanimated corpse. So, how frequently do we think that sort of thing happens? Is it really 50 percent of the time?

Recall as well that Craig would classify the resurrection as a miracle. By definition miracles are physically impossible occurrences made possible by supernatural means. So if he were consistent, he would agree that the prior probability of a corpse being raised from the dead is zero — otherwise it’s just a very rare, albeit natural, occurrence. For the resurrection to have meaning in Christianity it needs to be a miracle (i.e., an impossible event made real by supernatural means), which happened only once and which will not repeat until Judgment Day. This simply isn’t Craig’s game.

On the other hand, if apologists should choose to play along, I think they’re going to be disappointed. Richard Carrier in this presentation explains it better than I can (cued up to 26 minutes and 48 seconds into the video):

[youtube=http://www.youtube.com/watch?v=HHIz-gR4xHo&start=1608]

I’ll assume you watched at least a few minutes of the above video. If you did, you should understand that the prior probabilities are what matter in the resurrection question. Given our experience in the world, what is the estimated frequency of corpse reanimation?

Before we leave the subject of bodily resurrection, let me recommend a highly entertaining paper by Robert Greg Cavin entitled: “Is There Sufficient Historical Evidence to Establish the Resurrection of Jesus?” (Caution: PDF.)

Jumping to conclusions

Hajk writes:

I may think the probability that documents survived from the destruction of Jersalem [sic] that became the basis of Tacitus’ knowledge of Christianity is 5%. You may think it is 0.0005%. I may think that the probability that something actually stood at the place where the Testimonium Flavianum now stands (after having read all arguments from both sides) is 3.87446%. You may think it is 0.845532%. J.P. Meier may think it is 50%. Crossan may think it is 42%. Josh McDowell may think it is 90%. Is Bayes going to tell us which to use? And if so, then it will only do so by assuming other probailities [sic] to begin with. How are we going to get these?

Once again, these are conclusions. Of course, the probability that something else stood in place of the TF is both a conclusion and a point of consensus. Nobody thinks Josephus believed Jesus was the messiah. Hence, something else must have stood where the TF now stands. Now, what was it? Was it more than what we have now? Or was it less? Or was it nothing at all? From what we know about Christian interpolators, what can we estimate for the prior probability? I should think a discussion of these prior probabilities would shake our confidence about the supposed reconstructed TF presumed by NT scholars as “probably what Josephus wrote.”

That is how ‘Plugging in different values gives us different answers’. But to Vridar, this is natural because it is a “common feature of equations.” So what?

The “so what” is this: Discussing the prior probabilities exposes our assumptions. If we can reach a tentative agreement on the foundations, then we can let the math do the rest. As I’ve said before, NT scholarship is rife with statements about things being probable or improbable. However, these statements are almost always nothing but intuitive assumptions.

The Sound and the Fury

Hoffmann concludes:

As Morton Smith once said, I would rather put my trust in the myths of the Bible than in anything the mythics come up with: this is another example of hyperhypotheticalism with strong lashings of Macbeth 5.5 ( “. . . it is a tale

Told by an idiot, full of sound and fury,

Signifying nothing.”)

Since he invented the term, I suppose Hoffmann has the definition for hyperhypotheticalism. Perhaps it’s copyrighted. I’ll guess that it means taking hypotheticals to a ridiculous extreme. I’m a bit surprised we can have “another example” of a word that just got coined. We live in such interesting times.

[BTW, can anyone find a source for that Morton Smith quote?]


Well, you don’t just use BT once. You use it every time you analyze new evidence. That is the entire reason for using BT, updating your prior when you get new evidence.

So for example, if we have two people who are dealing with the Synoptic Problem (one starts with a prior of 90% that Mark was written first and the other starts with a prior of 22% that Mark was written first), it almost doesn’t matter where you start, because accumulating all of the evidence will push the two priors toward each other, as long as your priors aren’t 0 or 1, since those aren’t probabilities. And actually, if your prior is really in line with reality, then it won’t move much no matter how much evidence is gathered. Things like that I wouldn’t expect people unfamiliar with BT to understand, but anyway here goes:

Person 1 has the probability that Mark was written first, or P(Mark) = 90%. P(Mark) for the second person is 22%. The first evidence they analyze is the length of Mark. It is a fact that Mark is the shortest Synoptic Gospel (MSSG), so this is our evidence E (if Mark being the shortest gospel was only hypothetical, then the logic behind Occam’s Razor would apply, not BT). How likely is it that Mark would be the shortest gospel given that Mark was written first? A historian might look at this by doing a large survey of ancient works and see how many of the newer versions are shorter than the older versions. I’m not a historian so I wouldn’t know, but I would guess that the usual way it happens is that original compositions are shorter than the ones derived from them, though there could be shorter versions that came after the long version (like what’s argued is Marcion’s relation to Luke, at least until relatively recently). The word “usually” could be quantified in some way somewhere around 80% (since I don’t have the experience with historical documents that actual historians do). The third value we need is the false positive rate, or how many times a shorter document is actually a rewrite of a longer document. Again, not a historian, but this doesn’t seem like it happens a lot (5%). So for the sake of example BT would look like this:

Person 1: P(Mark | MSSG) = [P(MSSG | Mark) * P(Mark)] / [P(MSSG | Mark) * P(Mark) + P(MSSG | Not Mark) * P(Not Mark)]

: = (.8 * .9) / (.8 * .9 + .05 * .1)

: = .9931

Person 2: P(Mark | MSSG) = [P(MSSG | Mark) * P(Mark)] / [P(MSSG | Mark) * P(Mark) + P(MSSG | Not Mark) * P(Not Mark)]

: = (.8 * .22) / (.8 * .22 + .05 * .78)

: = .8186

Both priors moved up, which makes sense since shorter documents are usually earlier versions of longer documents (but again, not a historian here).

With our new priors, we look at some other evidence, like the content found only in Mark, such as Mk 8.22-26. How likely is it that this pericope would be in Mark given Mark’s priority? How likely is it that this pericope would be in Mark given some other Gospel’s priority, or, restated, that Mark added this pericope after reading the other Synoptics?

Again, just for example since I’m not a historian, P(Mk 8.22-26 | Mark) is “probable” or 80% and P(Mk 8.22-26 | Not Mark) is “extremely improbable” or 1%.

Person 1: P(Mark | Mk 8.22-26) = [P(Mk 8.22-26 | Mark) * P(Mark)] / [P(Mk 8.22-26 | Mark) * P(Mark) + P(Mk 8.22-26 | Not Mark) * P(Not Mark)]

: = (.8 * .9931) / (.8 * .9931 + .01 * .0069)

: = .9999

Person 2: P(Mark | Mk 8.22-26) = [P(Mk 8.22-26 | Mark) * P(Mark)] / [P(Mk 8.22-26 | Mark) * P(Mark) + P(Mk 8.22-26 | Not Mark) * P(Not Mark)]

: = (.8 * .8186) / (.8 * .8186 + .01 * .1814)

: = .9972

As you can see, the two priors are starting to converge. And you would repeat the process for each piece of evidence both for and against Markan Priority, with the priors changed from the previous use of BT (i.e. the posteriors) functioning as the priors for the next line of evidence.
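For anyone who wants to replay the arithmetic, here is a minimal Python sketch of the same chain of updates. The likelihoods are the illustrative guesses from the comment above, not measured values, and the names `bayes_update` and `run_chain` are made up for this example.

```python
def bayes_update(prior, p_e_given_h, p_e_given_not_h):
    """Return P(H | E) given P(H), P(E | H) and P(E | not H)."""
    numerator = p_e_given_h * prior
    denominator = numerator + p_e_given_not_h * (1.0 - prior)
    return numerator / denominator


# (P(E | Mark first), P(E | Mark not first)) for each piece of evidence.
# These are the illustrative guesses from the comment, not real data.
evidence = [
    (0.80, 0.05),  # Mark is the shortest Synoptic Gospel
    (0.80, 0.01),  # Mk 8.22-26 is found only in Mark
]


def run_chain(prior, evidence):
    """Apply each piece of evidence in turn; each posterior becomes the next prior."""
    history = [prior]
    for p_e_h, p_e_not_h in evidence:
        prior = bayes_update(prior, p_e_h, p_e_not_h)
        history.append(prior)
    return history


person1 = run_chain(0.90, evidence)
person2 = run_chain(0.22, evidence)

for step, (p1, p2) in enumerate(zip(person1, person2)):
    print(f"after {step} update(s): person 1 = {p1:.4f}, "
          f"person 2 = {p2:.4f}, gap = {abs(p1 - p2):.4f}")
```

The sketch carries full precision from one step to the next rather than rounding to four places each time, so the last digit can differ slightly from the hand calculation. The gap between the two people shrinks at every step, which is the convergence being described.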

This is a very good demonstration, J.Q.

Thank you.

I fear, however, that it will fall on reticent (if not altogether deaf) ears. The worst thing about academic hubris is that those who suffer from severe cases of it seldom allow for the mere possibility that they could ever be mistaken about even those things which only tangentially relate to their field of expertise or experience. If people like Hoffmann refuse to explore the nuanced utility and history of Bayesian methodology (a reading of McGrayne’s The Theory That Would Not Die would be a good start, for instance, after which he could delve deeper into the theorem’s demonstrable flexibility in application; yes, even in history!), there’s not much anyone can do to compel him to do so. Hubris is like that.

As the old aphorism goes:

“You can lead a horticulture, but you can’t make her think.”

At least the hubris is being documented for posterity.

I appreciate that.

“In most U.S. schools, statistics and applied mathematics are grouped together.”

Actually, when I was in school in the US, Statistics and (pure) Mathematics were separate departments sharing the same building, whereas Applied Mathematics was part of a department (with Applied Physics) in the engineering school. But that was eons ago.

Even in probability you cannot get by without hypothesis and theory. Seeking good explanations is not subjective in the sense that anything goes. There are criteria, and good theories, like the answers to good riddles, give you an “oh yes” feeling of inevitability.

You can define probability as a purely formal theory – defining mathematical objects and rules of the game, but to use such formal maths for real situations implies a theory of reality.

An operational definition of probability in terms of frequency does not work as theory-free either. Very many measurements, for example of what fraction of a particular nuclide decays, do converge, so we have a theory as to what fraction of the next nuclide will decay (bigger numbers enable better predictions of the fraction but actually poorer predictions of the exact number), but these predictions depend on the theory that we were not in a blip, and that laws with some uniformity can be used.

All probability usage, whether of the past or of the future depends on theorising, hypothesising, and seeking good explanations.
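The remark about decay measurements (larger samples give better predictions of the fraction but poorer predictions of the exact number) can be illustrated with a short Python sketch. It assumes, purely for illustration, that each atom decays independently with the same probability `p` during the observation window, which is a simple binomial model. The value `p = 0.5` and the function name `binomial_spread` are hypothetical.

```python
import math


def binomial_spread(n, p):
    """Standard deviation of the decay count and of the decayed fraction
    for n independent atoms, each decaying with probability p (assumed model)."""
    sd_count = math.sqrt(n * p * (1 - p))
    sd_fraction = math.sqrt(p * (1 - p) / n)
    return sd_count, sd_fraction


p = 0.5  # hypothetical decay probability over the observation window
for n in (100, 10_000, 1_000_000):
    sd_count, sd_fraction = binomial_spread(n, p)
    print(f"n = {n:>9}: count uncertain by about {sd_count:.1f} atoms, "
          f"fraction uncertain by about {sd_fraction:.5f}")
```

Under this model the uncertainty in the fraction shrinks like one over the square root of n, while the uncertainty in the raw count grows like the square root of n. The model itself is a theory about the world, which is the commenter's point.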

There is another fundamental difference between Epistemic and “Stochastic” (what I called ontological or objective) probability. 100% probability is reachable via objective probability. 2 + 2 has resulted in 4 100% of the times we’ve put 2 and 2 together. 100% probability has a totally different meaning in subjective probability. It doesn’t mean 100% confidence, it literally means infinite certainty.

This is the same sort of charge that religionists throw across the room at atheists when atheists claim to not believe in god: that an atheist is someone who is 100% certain that god doesn’t exist. Of course, no reasonable person has infinite certainty in anything, since they should always be open to changing their mind if new evidence to the contrary emerges. A person with 100% certainty can never change their mind while still following the laws of probability.
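The last point, that a prior of exactly 100% (or 0%) can never move, falls straight out of Bayes’ Theorem. Here is a small Python sketch; the likelihood values are arbitrary numbers chosen to represent strongly contrary evidence, and `bayes_update` is just an illustrative helper name.

```python
def bayes_update(prior, p_e_given_h, p_e_given_not_h):
    """Return P(H | E) given P(H), P(E | H) and P(E | not H)."""
    numerator = p_e_given_h * prior
    denominator = numerator + p_e_given_not_h * (1.0 - prior)
    return numerator / denominator


# Hypothetical evidence that is 999 times more likely if H is false.
P_E_IF_TRUE, P_E_IF_FALSE = 0.001, 0.999

certain = bayes_update(1.0, P_E_IF_TRUE, P_E_IF_FALSE)
nearly_certain = bayes_update(0.999999, P_E_IF_TRUE, P_E_IF_FALSE)
impossible = bayes_update(0.0, 0.999, 0.001)  # evidence strongly favouring H

print(f"prior 1.0      -> posterior {certain}")
print(f"prior 0.999999 -> posterior {nearly_certain:.6f}")
print(f"prior 0.0      -> posterior {impossible}")
```

With a prior of 1, the “not H” term is multiplied by zero, so no evidence can lower the posterior. A prior of 0.999999 is still responsive to evidence, which is the difference between very high confidence and infinite certainty.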

Another relevant quote:

Personally, I think using mathematical arguments is kinda pointless. If there is no proof there is no proof. You don’t need a convoluted mathematical argument to say ‘there is no proof’.

but leave it to ‘scholars’ to introduce math where its irrelevant.

Prosecution: “OJ killed Nicole.”

Scholar: I will prove it with Bayes’ theorem!

Judge: Wouldn’t it make more sense to use DNA evidence?

Scholar: No. I will use the probability of a husband killing his wife and a lot of fuzzy math.

Mark Fuhrman: Good enough for me.

Ironically, your “joke” works against you. DNA evidence is not always clear-cut, despite what law enforcement spokesmen and the media would have you believe. Check out this short article from the Journal of Forensic Sciences: “How the Probability of a False Positive Affects the Value of DNA Evidence” (warning: PDF).

From the abstract:

“Errors in sample handling or test interpretation may cause false positives in forensic DNA testing. This article uses a Bayesian model to show how the potential for a false positive affects the evidentiary value of DNA evidence and the sufficiency of DNA evidence to meet traditional legal standards for conviction.”
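To see roughly why a false positive rate matters so much, here is a simplified Python sketch. It is not the article’s own model. It assumes a true source is always reported as a match, and every number in it (the random match probability, the false positive rates, the prior) is hypothetical and chosen only for illustration.

```python
def match_likelihood_ratio(random_match_prob, false_positive_prob):
    """Likelihood ratio of a reported DNA match.

    Assumes P(reported match | suspect is the source) = 1. A non-source can be
    reported as a match by coincidence or through a lab or handling error.
    """
    p_report_if_not_source = (
        random_match_prob + false_positive_prob * (1.0 - random_match_prob)
    )
    return 1.0 / p_report_if_not_source


def posterior_probability(prior, likelihood_ratio):
    """Bayes' Theorem in odds form: posterior odds = prior odds * likelihood ratio."""
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


RANDOM_MATCH_PROB = 1e-9  # hypothetical "one in a billion" coincidental match
PRIOR = 0.001             # hypothetical prior that the suspect is the source

for false_positive_prob in (0.0, 1e-4, 1e-2):
    lr = match_likelihood_ratio(RANDOM_MATCH_PROB, false_positive_prob)
    post = posterior_probability(PRIOR, lr)
    print(f"false positive rate {false_positive_prob:g}: "
          f"likelihood ratio about {lr:.3g}, posterior about {post:.4f}")
```

In this sketch, once the false positive rate is much larger than the random match probability, the error rate rather than the headline “one in a billion” figure sets the strength of the evidence.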

Wait… there’s DNA evidence of Jesus that renders Carrier’s application of Bayes obsolete?

No, but they have deduced His fingerprints on the Aramaic proofs of the original Gospel mss.

Ah, right, of course.

Grog . . . Right . . . right . . . because, even if such a thing was clear and true (Aramaic “fingerprints” — wtf that means), everyone knows that Jesus was the only Aramaic speaker in antiquity.

That is a dumb thing for Ehrman to say; it’s even dumber coming from you.

“but leave it to ‘scholars’ to introduce math where its irrelevant. ”

Math is always relevant. You couldn’t do any modern history without a strong foundation in causality and formal logic. How the hell do you think people take a batch of evidence and use it to conclude anything? Math. Not statistics, but formal logic.

Bayes Theorem is just a way to apply logic in cases where we don’t know the truth values of various propositions. We can adjust the truth values of those propositions based on probabilities and get a reasonable idea of how probable a proposition is to be true. That’s all it is – a formal framework to provide guidance in those cases that degenerate down to “you’re wrong”, “no YOU’RE wrong you big doo-doo-head” that crop up in discussions where evidence is not clear-cut (or where agreement on what evidence MEANS is not clear-cut because arguments based on formal logic break down in the face of “I think this is true” vs. “No, you’re wrong, this other thing is true” style “arguments”).

Also this:

“You don’t need a convoluted mathematical argument to say ‘there is no proof’.”

No, but you do need to get people to agree to what evidence is relevant. And what it means. Formal logic can help you with that sometimes, but in cases where there is argument over what the evidence actually means, Bayes Theorem gives you a tool to be able to choose between possibilities like “yes, if this evidence means what I think it means it is meaningful to our discussion” vs. “even if this evidence means what I think it means, it doesn’t change a damn thing about what we’re arguing about.” And that’s an important tool if it can be used to strengthen an argument.

1. Biased gospels written by a church that worships him.

2. Biased epistles written by a church that worships him.

3. Biased apocryphal literature written by ‘heretics’ who worshiped him.

4. Statements in Tacitus, etc. that are clearly only derived from Tacitus or whoever having heard a Christian talk about him, certainly not from firsthand knowledge nor any official Roman records of his crucifixion or any such thing.

5. Statements in the Talmud or other Jewish literature that clearly doesn’t come from any firsthand knowledge nor any official documents, but clearly only from what some Christian says or from a Jew having read the biased gospels mentioned in #1 and twisting the story to make it irreverent.

None of this evidence is firsthand in any way. So it’s all irrelevant. Ok, so first hand versus 2nd hand is math…I suppose. But it’s not the kind of convoluted math ‘scholars’ use.

Bayes Theorem is part of the methodology of forensic DNA testing. It is used to calculate the probability of the test participant as the biological father of the child/children in paternity cases.

DH

I expect any moment now that reyjacobs will return and admit math *is* relevant in the evaluation of evidence.

What proof do we have of Jesus’ existence?

1. Biased gospels written by a church that worships him.

2. Biased epistles written by a church that worships him.

3. Biased apocryphal literature written by ‘heretics’ who worshiped him.

4. Statements in Tacitus, etc. that are clearly only derived from Tacitus or whoever having heard a Christian talk about him, certainly not from firsthand knowledge nor any official Roman records of his crucifixion or any such thing.

5. Statements in the Talmud or other Jewish literature that clearly doesn’t come from any firsthand knowledge nor any official documents.

I don’t need math to see that this kind of evidence amounts to nothing.

Tim,

Having seen no reply to my several comments (e.g. 6. above) I await further notice of the status of our proposed off-site discussion.